By Ryan Meyers, Chief AI Officer at Manifest Climate

Most ESG professionals, including ESG teams at financial institutions and ESG consultants, have discovered the potential of AI for their work. When we speak with ESG teams, many are actively using general-purpose tools like Claude and ChatGPT for everyday tasks and have found them genuinely helpful.

Where things start to break down is when the work shifts from productivity support to complex ESG analysis and decision-making. At that point, the question becomes less about whether AI is useful (no one can deny that), and more about whether a particular tool can be trusted for the job at hand. For some use cases, general AI works well. For others, especially where accuracy, consistency, and auditability matter, specialist tools are a better fit.

That’s where sustainability-specific AI comes in. These tools are built for sustainability work, and designed to support the level of rigor and trust that ESG analysis demands.

When should teams use generic vs. specialized AI tools?

Where generic AI tools work best

Generic AI tools come in a wide range of names and providers (ChatGPT, Copilot, Claude, Gemini, and Grok, to name a few), but they tend to work in similar ways. These are broad-purpose tools trained on general datasets, often drawing from large swathes of the public internet.

Used with an understanding of their limits, they can be genuinely useful. Generic AI works well for tasks like searching for information, answering straightforward questions, summarizing documents, brainstorming ideas, drafting content, and supporting early-stage strategy work.

Where challenges emerge is around context. A generic AI tool’s outputs are only as good as the perspective and information it is given. To answer a sustainability question well, a model needs to be oriented in the right direction and grounded in the right criteria. That includes an understanding of how you define the problem, what standards or frameworks you’re applying, and what you already know about the company or documents being analyzed.

This is a context problem, rather than a training problem. General AI has a surface-level grasp of almost everything, but lacks the depth or grounding required for rigorous, repeatable sustainability analysis.

When specialized tools are needed

Specialized AI tools are built to close that gap. They’re designed for specific industries or functions, with sustainability-specific tools like Manifest Climate focused on clearly defined, repeatable workflows. These models are optimized for accuracy, structure, and scale, so you can work confidently within the context(s) most relevant to you.

I think of the difference between generic and specialized AI like the difference between a knife and a Cricut (a computer-controlled cutting machine designed for precision cutting on a range of materials). Both are powerful, but you wouldn’t use them in the same scenarios. You might be able to cut a complex stencil with a knife, but it’ll be much harder and the result will be far less precise. The knife can do more things, but it can’t beat the Cricut at what it’s good at. The Cricut is fit for purpose in a way that a standard knife simply isn’t.

I see three specific use cases that will each benefit from access to specialized AI tools:

1 — Benchmarking

Comparing company performance against peers, leaders, or an industry as a whole. Benchmarking insights can be a powerful way to build the case for sustainability action.

Generic AI tools struggle here because benchmarking requires applying consistent logic across many companies and documents, and their approach is not designed to do that at scale.

2 — Comparison against standards, frameworks, or regulatory requirements

Understanding sustainability reporting compliance, or measuring company disclosure against best-practice standards and frameworks, such as CSRD, CDP, GRI, ISSB, or California Climate Rules. Without a clear understanding of how requirements are structured or how they should be interpreted in practice, generic AI tools often misread compliance.

3 — Custom analysis

Analyzing documents or company information with a specific or proprietary lens, powering work such as portfolio monitoring or market research. It’s hard to apply a prompt for detailed custom analysis criteria with a generic LLM. You might be able to get there by asking specific questions in succession for a single company, but this becomes almost impossible to do at the scale most ESG teams require.

Scenario: Generic vs. specialized AI for peer benchmarking

Let’s consider the following scenario:

You are a sustainability analyst looking to compare the ESG performance of two different companies in the semiconductor sector, NVIDIA and Broadcom. You upload both companies’ sustainability reports (which are each 40+ pages long) to ChatGPT and ask it questions.

ChatGPT sounds confident in answering your questions, but when you compare what it tells you against the source documents, you’ll find that the answers it provides are typically only 50% correct at best. If you ask the same question twice, you’re likely to get a different answer each time. If you ask for a year-on-year comparison, you won’t get an apples-to-apples comparison. And if you try to ask multiple questions across multiple documents, you’ll soon see that it all but breaks under the pressure.

Using ChatGPT vs. Manifest Climate to understand how two companies use the GHG Protocol to determine greenhouse gases

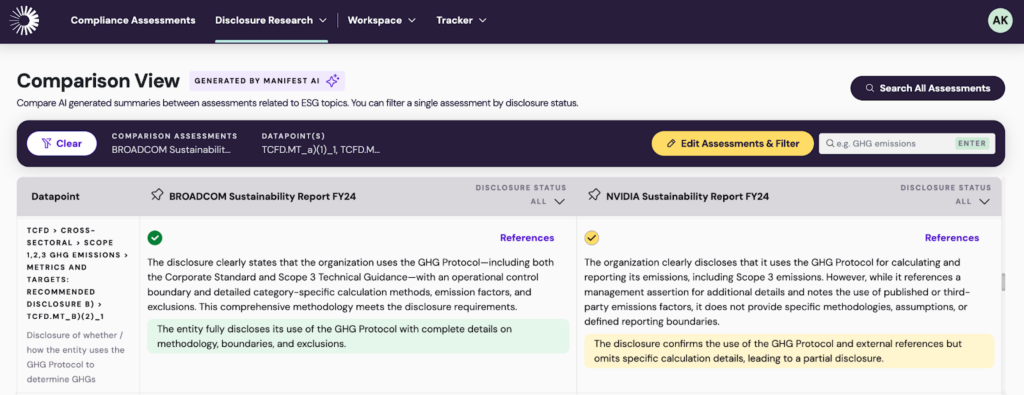

In this example, I’ve used Manifest Climate to compare two companies, Broadcom and NVIDIA, on their disclosure around GHG Protocol usage.

Manifest Climate allows me to compare the two companies directly against this particular TCFD recommendation.

You can watch a quick video of me comparing five different companies against TCFD data points below.

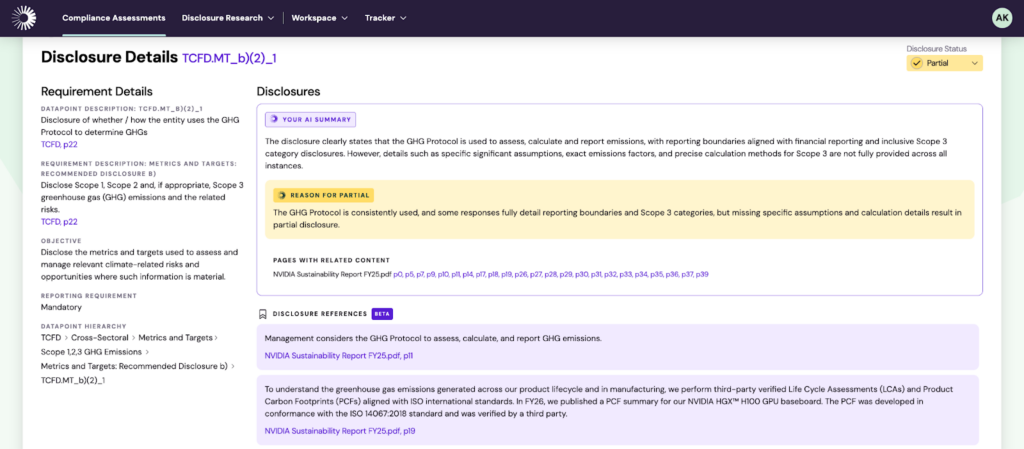

Manifest Climate shows: A detailed disclosure for Broadcom that meets TCFD governance and metrics requirements, and a partially met disclosure for NVIDIA. For NVIDIA, the platform provides clear context on where specific calculation methodologies are omitted, enabling users to drill down into the gaps and understand precisely which TCFD requirements are not fully addressed. Manifest Climate can benchmark multiple companies at once (from a handful, as in this example, to hundreds), analyzing detailed datapoints across regulatory frameworks and custom methodologies.

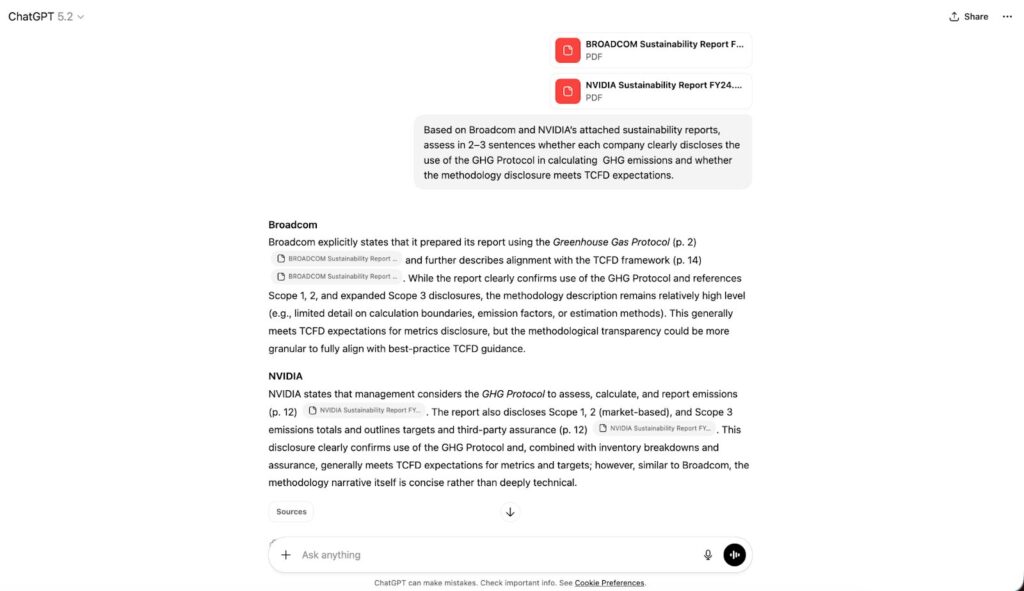

Meanwhile, when I attempt the same analysis in ChatGPT, I don’t get nearly the same output.

ChatGPT shows: A generalized summary without explicit compliance judgments, making it unclear whether the disclosure meets TCFD requirements or where gaps remain.

The takeaway here is that while ChatGPT is a handy tool in certain situations, a task like detailed sustainability research and analysis requires a kind of rigor and scale that ChatGPT struggles to reach. Manifest Climate’s assessment is more precise, aligned with updated regulatory guidelines, and grounded in evidence that can be traced back to the source. With Manifest Climate, I can also repeat this same analysis or comparison over and over again, swapping out my companies of choice. If I tried that in ChatGPT, I’d likely get very different outputs each time.

What’s at stake when sustainability professionals rely on generic AI tools for specialist tasks

1 — Accuracy and reliability

Confidence scores and hallucinations

We’ve all experienced a tool like ChatGPT confidently and professionally producing completely fabricated results. This is because generic AI always sounds confident, no matter how accurate the results actually are. You can ask generic AI for confidence scores, but these are unreliable at best and give no robust indication of how much you should trust the answer. A tool like Manifest Climate, however, will always give confidence scores on its outputs.

The most obvious example of this is hallucinations, which most of us have experienced at some point. While they can be amusing in some contexts, there is far too much at stake when conducting tasks like benchmarking, disclosure gap analyses, or other custom analyses for this to be an acceptable trade-off of using a low-cost tool.

Guardrails

Even when an LLM has the right context (say, it understands your custom ESG screening criteria and can perfectly read the PDFs you upload), LLMs have a habit of occasionally not listening to instructions. To prevent LLMs from doing this, models need guardrails. These are programmatic or code-based checks on the model’s outputs, which ensure that it is not going outside the bounds of what it’s trained to do.

Generic AI tools don’t have these, but a tool like Manifest Climate does. If you’re using Manifest Climate and our LLM quotes a line from a company’s sustainability report, the guardrails will check to see that the quote does in fact exist on the page the LLM says it does. If there’s not at least a 90% match between what the LLM has extracted and what’s on the page, our software will redo the whole assessment for that data point.
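To make the idea concrete, here is a minimal sketch of a quote-verification guardrail in Python. It is an illustration only, not Manifest Climate’s actual implementation: the function names, the text normalization, and the use of the standard library’s `difflib.SequenceMatcher` as the similarity measure are all assumptions. Only the 90% threshold comes from the description above.

```python
from difflib import SequenceMatcher

# The 90% figure comes from the text; everything else here is hypothetical.
MATCH_THRESHOLD = 0.90


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so PDF line breaks don't hurt the match."""
    return " ".join(text.lower().split())


def best_match_ratio(quote: str, page_text: str) -> float:
    """Return the best similarity (0.0 to 1.0) between the quote and any
    quote-sized window of the page text."""
    q = normalize(quote)
    p = normalize(page_text)
    if not q:
        return 0.0
    if q in p:
        return 1.0  # exact match after normalization
    if len(p) <= len(q):
        return SequenceMatcher(None, q, p, autojunk=False).ratio()
    best = 0.0
    # Slide a window the length of the quote across the page.
    for start in range(len(p) - len(q) + 1):
        window = p[start:start + len(q)]
        ratio = SequenceMatcher(None, q, window, autojunk=False).ratio()
        best = max(best, ratio)
    return best


def quote_passes_guardrail(quote: str, page_text: str,
                           threshold: float = MATCH_THRESHOLD) -> bool:
    """True if the extracted quote is found on the page closely enough."""
    return best_match_ratio(quote, page_text) >= threshold


page = ("We calculate our Scope 1 and Scope 2 emissions in accordance "
        "with the GHG Protocol Corporate Standard.")
real_quote = "Scope 1 and Scope 2 emissions in accordance with the GHG Protocol"
fake_quote = "Our emissions were independently verified by a third party in 2023."

print(quote_passes_guardrail(real_quote, page))  # True
print(quote_passes_guardrail(fake_quote, page))  # False
```

In a pipeline built along these lines, a failed check would trigger a re-run of the assessment for that data point rather than passing the unverified quote on to the user.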

Guardrails like these are essential protection against hallucinations when the stakes for accuracy are high. Like a Cricut keeping the blade in precise locations, guardrails make sure the outputs are accurate for your specific purpose. This is a feature unique to specialized AI, since generic AI is designed for general use.

Capturing the right context

For ESG teams, the challenge is making sure their AI tools are looking for the right signals. This is the ‘context’ discussed above. That context might come from regulatory requirements, disclosure frameworks, or internal criteria (like how your organization identifies alpha or puts its own slant on interpreting CSRD).

Generic AI tools struggle here because they don’t know which data points matter most to you. With Manifest Climate, our team builds the prompts based on our clients’ custom needs. These custom prompts embed your view of the world or your particular way of screening/analyzing/monitoring into our models.

Precision engineering

Like a Cricut, specialized AI tools are precision-built for a specific purpose. Manifest Climate uses robust document extraction to capture the full context of ESG materials, then structures that information so the model has exactly what it needs to produce accurate, reliable insights.

Extracting knowledge from PDFs

Another area generic AI models often struggle with is extracting knowledge from PDFs. Even ChatGPT and Claude can have trouble with complex documents, especially those that are heavily designed or that include tables, footnotes, graphics, or scanned pages. PDF extraction is a technical challenge, and when the model can’t fully read a document, the user ends up with a ‘garbage in, garbage out’ scenario. If the AI doesn’t have reliable access to the underlying content, it lacks the context needed to answer questions about the company or disclosure accurately. Regardless of how well you prompt it, it can’t give you what you want.

2 — Security and confidentiality

Generic AI platforms are rarely designed for handling sensitive ESG data. Entering information through ChatGPT or Claude by default carries a risk that proprietary or confidential information can be used for model training, meaning that those model providers can bake your prompts into their AI’s knowledge base in subsequent model training. There are methods to reduce this risk, such as turning this setting off in Account Settings, but you are still placing a large amount of trust in these model providers not to misuse your proprietary data.

3 — Lack of scale

General AI tools are designed for one-off interactions, not repeatable analysis at scale. The limitations become more obvious as the scope grows. When teams try to run the same questions across multiple companies, documents, or reporting periods, consistency breaks down. Teams are forced to manually check and reconcile to the point where it can take more time, not less, to conduct analyses.

Token limits

You also run into context limits, where the model reaches the maximum amount of text it can process in a single interaction. This means that general AI can bump into issues pretty quickly when it comes to analysis of large amounts of text. OpenAI, the provider of ChatGPT, and others offer techniques such as Retrieval-Augmented Generation (RAG) to work around this issue, but these present a host of new challenges that can put the quality of results at risk. So, using general AI often turns into a game of whack-a-mole, with users trading off one problem for another.
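A simplified sketch shows where some of those new challenges come from. Before a long report can be searched and fed to a model piece by piece, it has to be split into chunks that fit within the model’s limit. The Python below is a hypothetical illustration, not any vendor’s method: the function name is made up, and it counts words as a rough stand-in for tokens, which real systems measure with a model-specific tokenizer.

```python
def chunk_words(text: str, max_words: int = 200, overlap: int = 20) -> list[str]:
    """Split text into overlapping chunks of at most max_words words.

    Words are a rough proxy for tokens (an assumption made for illustration).
    The overlap repeats a few words between neighbouring chunks so that a
    sentence cut at a boundary still appears whole in at least one chunk.
    """
    if max_words <= 0 or overlap < 0 or overlap >= max_words:
        raise ValueError("need max_words > 0 and 0 <= overlap < max_words")
    words = text.split()
    chunks = []
    step = max_words - overlap
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the final chunk already reaches the end of the text
    return chunks


report = " ".join(str(i) for i in range(10))  # stand-in for a long report
print(chunk_words(report, max_words=4, overlap=1))
# ['0 1 2 3', '3 4 5 6', '6 7 8 9']
```

Every choice here (chunk size, overlap, where the cuts fall) affects which passages the model ends up seeing, which is one reason retrieval-based workarounds can change the quality of the answers.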

The future of AI tools for sustainability

The next generation of AI tools for sustainability will bring two worlds together. They will combine the accuracy and specificity of domain-built models with the ease and familiarity of a conversational interface.

Specialized tools don’t exist in isolation. Just as a Cricut wouldn’t exist without the invention of the knife, ESG-specific platforms like Manifest Climate wouldn’t exist without advances in general-purpose AI. Generic models laid the foundation by showing what was possible. Specialized tools take that capability and apply it with intent.

That’s already starting to happen through Manifest Climate’s agent. The specialized AI enables users to create entire customized datasets at scale, with high-quality, accurate, traceable data at its heart. Then, the agentic layer, like ChatGPT for custom ESG data, sits on top of users’ own data and enables them to ask questions that are informed by high-quality insights rather than by a model’s outdated or generalized, baked-in knowledge.

You can delegate tasks in natural language, ask questions across the companies, documents, and datapoints you care about, and get source-traceable insights aligned with your preferred standards or custom methodologies. That intelligence lives natively inside Manifest Climate, and can also be accessed through tools ESG teams already use, including integrations with platforms like Microsoft Copilot.

The work ESG professionals do has never mattered more. The right AI tools can help them do it with greater clarity, confidence, and impact.